Egocentric computer vision – a giant leap

Artificial intelligence can digest and codify the visual information that sighted people take for granted in their day-to-day processing. But how can we capture the huge volumes of visual data a person experiences in a day? Researchers at the University of Bristol have contributed to an ambitious new project to address this challenge.

From the moment we open our eyes in the morning, our brains are processing visual information. This helps us move safely through the world, understand people, manipulate objects and perform tasks ranging from the mundane (opening a door) to the complicated (playing football with friends).

Inspired by the University of Bristol’s successful EPIC-KITCHENS dataset, a consortium of 84 researchers from 13 universities partnered with Facebook AI to create EGO4D, a massive-scale dataset of footage captured through the eyes of 923 people. The 3,670 hours of footage, recorded from the ‘egocentric’ perspective (ie from the viewpoint of the person wearing the camera), will help researchers make advances in computer vision. EGO4D aims to advance research in augmented reality, understanding social interactions, assistive technology and robotics.

Headcam footage – from the Outer Hebrides to Japan

Each of the 14 research partners collected data in its own geographic region. The University of Bristol is the only UK representative in this international effort. At Bristol, we posted cameras to 82 participants, who captured footage of daily activities of their choosing.

PhD alumnus Jonathan Munro explains: “Using Zoom, I would brief participants about the project and its goals, and collect informed consent. Then I would take them through a quick demo of how to use a head-strapped GoPro to record and retrieve videos. Participants were encouraged to record activities they naturally do – some recorded themselves practicing a musical instrument, others captured gardening, grooming their pet or watering their plants. We posted cameras all around the UK, including one to a participant on the Isle of North Uist in the Outer Hebrides.”

In total, 270 hours were recorded in the UK (75 per cent around Bristol, with the rest captured elsewhere in England, Scotland and Wales) and released in compliance with GDPR.

A diverse dataset

Capturing the diversity of human experience required more than geographical spread.

Prof Dima Damen notes: “Some people had related jobs, such as photographer or software engineer, but others didn’t. The wide range of participants included a bookseller, a musician and a carpenter, as well as people who had retired. We are grateful to everyone who took the time to ensure our data is diverse.”

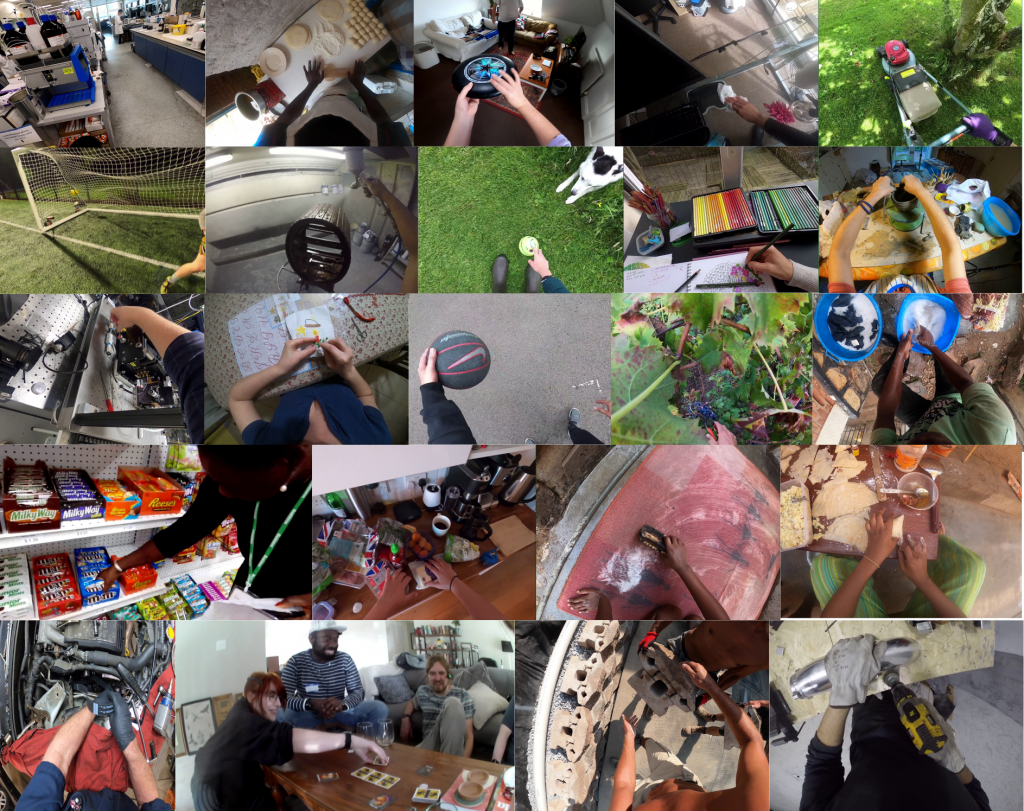

In the UK, 55 per cent of our participants were female. The variety of our footage speaks for itself; the collage above demonstrates some of the activities recorded by the University of Bristol as part of our contribution to the EGO4D international effort.

In addition to the captured footage, a suite of benchmarks is available to help researchers test their work on the dataset. Each benchmark is a problem definition paired with manually collected labels, so that different models can be compared on the same task. EGO4D benchmarks relate to understanding places, spaces, ongoing actions and upcoming actions, as well as social interactions. For example, researchers working on forecasting can use the relevant benchmark to see whether (and how accurately) their AI can predict what will happen next.
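To make the idea of a benchmark more concrete, here is a minimal Python sketch of how a forecasting-style benchmark might compare models: the task is fixed (predict the next action in a clip) and every model's guesses are scored against the same manually collected labels. The clip names, actions and the top-k accuracy metric are hypothetical choices for illustration only; they are not EGO4D's data format or its official evaluation metrics.

```python
# Toy benchmark: a fixed problem definition (predict the next action)
# plus manually collected labels. All clips, labels and predictions
# below are hypothetical, not EGO4D data or EGO4D's official metrics.

def top_k_accuracy(predictions, labels, k=5):
    """Fraction of labelled clips whose true next action is in the model's top-k guesses.

    predictions: dict mapping clip id -> list of actions, most likely first
    labels: dict mapping clip id -> the manually annotated next action
    """
    if not labels:
        raise ValueError("no labelled clips to evaluate")
    # A clip the model skipped counts as a miss (empty guess list).
    hits = sum(1 for clip, truth in labels.items()
               if truth in predictions.get(clip, [])[:k])
    return hits / len(labels)


# Hypothetical annotations: what the camera-wearer actually did next.
labels = {"clip_001": "open door", "clip_002": "water plant", "clip_003": "strum guitar"}

# Two hypothetical models evaluated against the same labels.
model_a = {
    "clip_001": ["open door", "close door"],
    "clip_002": ["pick up cup", "water plant"],
    "clip_003": ["tune guitar", "put down guitar"],
}
model_b = {
    "clip_001": ["close door", "open door"],
    "clip_002": ["water plant"],
    "clip_003": ["strum guitar"],
}

for name, preds in [("model_a", model_a), ("model_b", model_b)]:
    print(name,
          "top-1:", round(top_k_accuracy(preds, labels, k=1), 2),
          "top-2:", round(top_k_accuracy(preds, labels, k=2), 2))
```

Because both models are scored on identical labels, their numbers are directly comparable: here model_b ranks above model_a at both top-1 and top-2, which is exactly the kind of comparison a shared benchmark is meant to enable.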

Privacy and ethics

Naturally, when asking members of the public to record footage of their lives, privacy and ethical standards are critical. All camera-wearers chose the activities they would record from a predefined list, so participants controlled what was captured in their footage. Beyond approval from our Research Ethics Committee and informed consent from all participants (standard procedures in academic research), participants could review their videos and delete them in whole or in part. However, responsibility for de-identifying the data lies with the researchers.


Videos were reviewed by the researchers, and any identifying information (eg a car number plate, an accidentally captured face, mobile phone messages or an address on an envelope) was blurred using specialist redaction software. At the University of Bristol, we worked closely with Primloc’s Secure Redact, who customised their solution to our needs.

What does the dataset mean for the public?

The dataset will provide a test bed for applications that can answer everyday questions such as:

- Did I add salt to my meal?

- Who did I meet in the park?

- Where did I leave my keys?

The researchers hope that such applications will become part of everyday life.

“You could be wearing smart augmented reality glasses that guide you through a recipe or how to fix your bike – they could even remind you where you left your keys,” said Principal Investigator at the University of Bristol and Professor of Computer Vision, Dima Damen.

What’s next for EGO4D?

The paper describing the project has been accepted for presentation at the prestigious international Conference on Computer Vision and Pattern Recognition (CVPR), to be held in New Orleans in June.

A series of technical challenges will also be set, with winners announced at the Joint 1st Ego4D and 10th EPIC Workshop held alongside CVPR (https://sites.google.com/view/cvpr2022w-ego4d-epic/).

EGO4D is also now available for download by international researchers at https://ego4d-data.org/#download. Please check the license terms for permitted uses and restrictions.

Further information

Any researcher seeking access to the data must review and formally assent to the license terms imposed by each EGO4D partner, which clearly set out permitted uses, restrictions and the consequences of non-compliance. Access is granted only after each university has reviewed these commitments, and is provided through unique credentials that expire after a specified period. Storage of EGO4D data must comply with GDPR and other regional requirements.

For updates and more information, please visit ego4d-data.org/